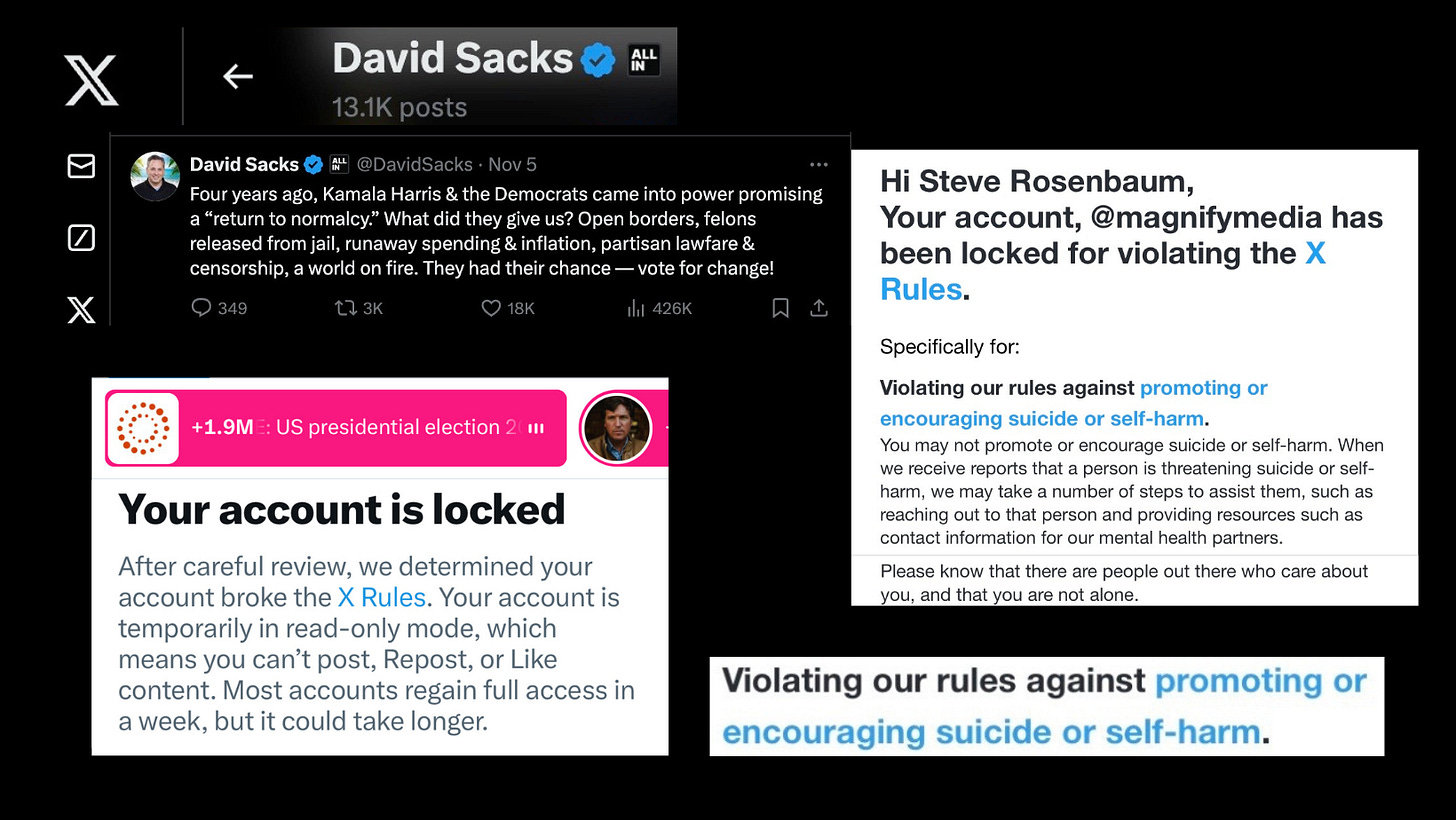

This wasn't how I planned to spend one of the most significant evenings in our democratic process. Instead of refreshing my feed for election updates, I was staring at a suspension notice on my X account. In all my years on social media - and I mean every platform I've ever used - I had never been banned. Not once. The shock of it hit even harder given the timing.

Just moments earlier, I had responded to David Sacks's post comparing election candidates. My comment focused on healthcare policy, specifically the dangerous suggestion, floated during the pandemic, of using bleach as a COVID-19 treatment. It was straightforward historical context, nothing more.

"After careful consideration," the notice read. Careful consideration that somehow took mere seconds. The stated reason? They claimed I had suggested self-harm or suicide - something I absolutely had not done. The disconnect between my actual comment and their interpretation was baffling.

The Algorithm's Verdict

What I experienced that night, as Shoshana Zuboff might argue, was more than just a moderation decision - it was what she calls "surveillance capitalism" in action. An automated system, optimized for control rather than understanding, had made an instant judgment about the bounds of acceptable discourse.

The speed was telling. Real human moderation requires time - time to read, understand context, and make reasoned decisions. This wasn't careful consideration; this was what Emily Bell, founding director of the Tow Center for Digital Journalism, describes as "algorithmic governance" - where machines, not humans, increasingly control our public sphere.

From Public Square to Private Kingdom

But this isn't just about algorithmic moderation. As Yochai Benkler points out in "Network Propaganda," we're witnessing the transformation of what was once positioned as humanity's digital commons into a private platform for influence. The consequences are far-reaching:

Selective Enforcement: Moderation policies appear increasingly aligned with the owner's political viewpoints rather than consistent community standards

Shadow Banning: Subtle suppression of certain viewpoints through reduced visibility, creating an illusion of open discourse while effectively silencing specific perspectives

Verification as Control: The monetization of basic features like verification has created what Kate Klonick calls "The New Governors" - a multi-tiered system of speech where amplification is tied to payment

Data Transparency: Critical research tools and API access have been restricted or paywalled, limiting public understanding of information flows and manipulation

The Fragmentation of Public Discourse

As Eli Pariser predicted with his concept of "filter bubbles," our digital town square isn't just becoming privatized - it's splintering. Communities are retreating into smaller, disconnected spaces: alternative platforms, private Discord servers, closed Telegram groups. While this fragmentation might protect some communities from arbitrary moderation, it comes at what danah boyd calls "the cost of networked manipulation" - the loss of cross-pollination of ideas and genuine public debate.

Four Critical Transformations

Through the lens of my election night ban, we can see four fundamental shifts that scholars have identified in our information landscape:

MSM Decline vs. Social Media Rise: Traditional media outlets have lost their authority and reach, while social platforms have become the dominant force in shaping public opinion and distributing news.

Echo Chambers & Algorithms: Social media algorithms create isolated information bubbles, leading to increased polarization as users are served content that only reinforces their existing beliefs.

Information Crisis: The rapid spread of misinformation, coupled with declining trust in both traditional and social media, has created an environment where truth competes with engagement for attention.

Demographics & Change: Younger generations have shifted their media consumption away from traditional news sources toward social media, podcasts, and online influencers, leaving traditional media struggling to reach key audiences.

The Decline of Traditional Media Authority

What Joan Donovan at Harvard's Shorenstein Center calls "the attention economy" has fundamentally altered how information spreads. When a single tweet can shape public opinion more effectively than a carefully researched newspaper article, we're dealing with what Jay Rosen describes as "the great horizontal" - the flattening of traditional information hierarchies.

The Echo Chamber Effect

As Zeynep Tufekci argues, social media algorithms don't just organize content - they actively shape our worldview by creating isolated information bubbles. My instantaneous ban is a symptom of what she calls "algorithmic amplification" - where automated systems reinforce division rather than bridge it.

The Information Crisis

Charlie Warzel's concept of "reality-warping attention maximization" helps explain how truth now competes directly with engagement. When platforms prioritize "engaging" content over accurate content, they create an environment where misinformation can thrive.

The Demographic Revolution

Laura DeNardis's work on internet governance highlights how younger generations have fundamentally different relationships with information and authority, abandoning traditional media for social platforms and online influencers. This isn't just about changing preferences - it's about a complete transformation in how future generations will engage with democratic processes.

The Path Forward

The solution isn't returning to an imagined golden age of media - that's impossible and probably undesirable. Instead, we need what internet governance expert Laura DeNardis calls "democratic digital infrastructure" - new spaces and systems that combine the democratizing power of social platforms with genuine public oversight.

Options worth exploring include:

Democratically governed social platforms

Open-source algorithms for content moderation with public oversight

Digital public infrastructure supported by public funding

User control over their digital identity and data

Decentralized social networks resistant to individual control

My election night ban wasn't just about one comment on healthcare policy - it was a window into what might be the defining challenge of our digital age: how to preserve democratic discourse in an era of privatized digital commons, algorithmic governance, and fractured digital public spaces.

The question isn't whether we'll need new spaces for public discourse - that's inevitable. The question is whether we'll learn from this experiment in privatized digital commons to build something more democratic, transparent, and resistant to both algorithmic and plutocratic control.

Steve Rosenbaum

Thanks for reading. Feedback is welcome at info@sustainablemedia.cente